This week I’ve been lucky enough to free up enough time to attend SplunkLive! at the Intercontinental Hotel in London. I’m a big advocate of getting out of the office and away from the work ‘bubble’ to educate myself on product developments, and of networking with like-minded individuals to see what their focus is and, of course, what technologies they are using.

Data is key nowadays, and how businesses use that data to thwart a potential attack is very interesting. What is more interesting for me, though, is how we can use the masses of data to add value back to the business through data analysis.

The event runs over two days, with a typical format: the first day is focused more on training, the second more on keynotes, talks, and networking.

My information security colleagues will focus on how to use the platform more efficiently and add better intelligence to the data they are ingesting, whereas my focus is more around the service intelligence piece, where we could potentially use the platform in the monitoring space. It’s an area of technology which is fast moving, especially with the introduction of AIOps. For those not in the know (and I don’t claim to be!), AIOps is essentially a term used to describe how artificial intelligence can be used to enhance the data that your nodes (servers, containers, apps, network equipment, etc.) are producing and turn it into some meaningful output. That output can be used to predict failures or issues within your environments and then act on them in real time, without human intervention.

Traditional monitoring platforms have generally taken in data using protocols such as SNMP and WMI, amongst others, and products such as Solarwinds, Nagios and PRTG were common in most enterprise IT teams. Time has moved on, and we now have far more tools at our disposal with the introduction of APIs, which often provide that data in a far more structured way. This makes it far easier to query the data and therefore to detect patterns and extract meaningful information.

Most of the above sounds like some type of sales pitch (which I’m trying to avoid, sorry!), but what I’m trying to say is that not all IT hardware or apps output data in the lovely structured format that a SIEM such as Splunk or LogRhythm can make sense of out-of-the-box, and that is where the challenge comes. A lot of work is needed to normalise that data and focus in on the parts that add value, especially if you’re taking in 100+ GB of data on a daily basis.
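To give a feel for what "normalising" means in practice, here is a minimal Python sketch. It is not how Splunk does it internally; the field names, the alias table and the list of fields worth keeping are all made up for illustration. The idea is simply to map the different names each source uses onto one common name, and drop everything that doesn’t add value.

```python
# Hypothetical alias table: different sources call the same thing different names.
FIELD_ALIASES = {
    "src": "src_ip",
    "source_address": "src_ip",
    "clientip": "src_ip",
    "user_name": "user",
    "username": "user",
}

# Hypothetical set of fields we consider worth keeping.
KEEP_FIELDS = {"src_ip", "user", "action"}


def normalise(event):
    """Rename known aliases to a common field name and drop unwanted fields."""
    normalised = {}
    for key, value in event.items():
        common_name = FIELD_ALIASES.get(key.lower(), key.lower())
        if common_name in KEEP_FIELDS:
            normalised[common_name] = value
    return normalised


firewall_event = {"SRC": "10.0.0.5", "UserName": "alice", "debug_blob": "x"}
print(normalise(firewall_event))
```

The more sources you onboard, the bigger that alias table gets, which is exactly where the effort goes.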

My employer Stagecoach are very much on a journey of ensuring logs are all shipped to Splunk, and many of our information security team are going through formal training to get the skills to get the most value out of the product. We’re already seeing some fantastic output from them, so I’m hoping the next couple of days show us some great new features and the networking sheds some light on how others are using the platform.

Day 1 – Splunk4Rookies

The day for me started off with a Splunk4Rookies session, which was a well organised hands-on session teaching how to get data into Splunk, how to analyse it, and how to create various dashboards from web logs (such as Apache), ranging from geolocated maps of source IPs to comparisons of those sources against status codes. Very common use cases, but a perfectly good example of how you could potentially diagnose an issue with your web apps.
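For anyone who hasn’t played with web logs before, this is roughly the kind of analysis involved, sketched in plain Python rather than Splunk’s own search language. The log lines below are invented samples in the Apache common log format, and the function name is my own; the session itself did all of this inside Splunk.

```python
import re
from collections import Counter

# Apache common log format: host ident user [time] "request" status size
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<size>\S+)'
)

# Invented sample lines, for illustration only.
SAMPLE_LINES = [
    '203.0.113.7 - - [10/Mar/2020:09:15:01 +0000] "GET /index.html HTTP/1.1" 200 5120',
    '203.0.113.7 - - [10/Mar/2020:09:15:03 +0000] "GET /missing HTTP/1.1" 404 312',
    '198.51.100.23 - - [10/Mar/2020:09:15:04 +0000] "POST /login HTTP/1.1" 500 128',
    '198.51.100.23 - - [10/Mar/2020:09:15:09 +0000] "POST /login HTTP/1.1" 500 128',
    'not a log line',
]


def status_counts_by_ip(lines):
    """Count how often each (source IP, status code) pair appears."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip anything that isn't a recognisable log line
        counts[(match["ip"], int(match["status"]))] += 1
    return counts


counts = status_counts_by_ip(SAMPLE_LINES)
for (ip, status), hits in sorted(counts.items()):
    print(ip, status, hits)
```

A source IP racking up lots of 500s or 404s is the sort of thing that jumps out once the data is on a dashboard.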

They even went as far as to show the predict command, which does what it says on the tin and predicts the future based on the data it already has. I wasn’t aware of this, so I was suitably impressed by how easy the forecasting was. Well done, Splunk.
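To illustrate the general idea of forecasting from data you already have, here is a deliberately simple Python sketch that fits a straight line through past values and extends it forward. To be clear, this is a plain least-squares trend line that I’ve swapped in for illustration, not what the predict command does under the hood, and the numbers are made up.

```python
def fit_linear_trend(values):
    """Least-squares fit of y = intercept + slope * x, with x = 0, 1, 2, ..."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two data points to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(values, steps):
    """Extend the fitted trend line `steps` points beyond the known data."""
    slope, intercept = fit_linear_trend(values)
    n = len(values)
    return [intercept + slope * (n + i) for i in range(steps)]


# Made-up hourly request counts, for illustration only.
hourly_requests = [100, 110, 120, 130, 140]
print(forecast(hourly_requests, 2))
```

Real traffic is seasonal and noisy, so a straight line is only a starting point, which is why having a ready-made command for it is so handy.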

The evening was dedicated to Boss of the SOC/NOC, an event that tests teams on resolving issues using the full Splunk toolset. Good fun, with useful elements to learn in there too. Usually I’m disappointed with the ‘training’ days at these events, but this was an enjoyable one.

Day 2 – Keynote and Sessions

The second day began with a strong keynote and some good case study examples from Airbus, Lloyds Banking Group and the Bank of England.

In typical playful Splunk style, their big announcement was a new F1 themed t-shirt. In all fairness to their marketing department, it’s superb, even if it is very close to the wire!

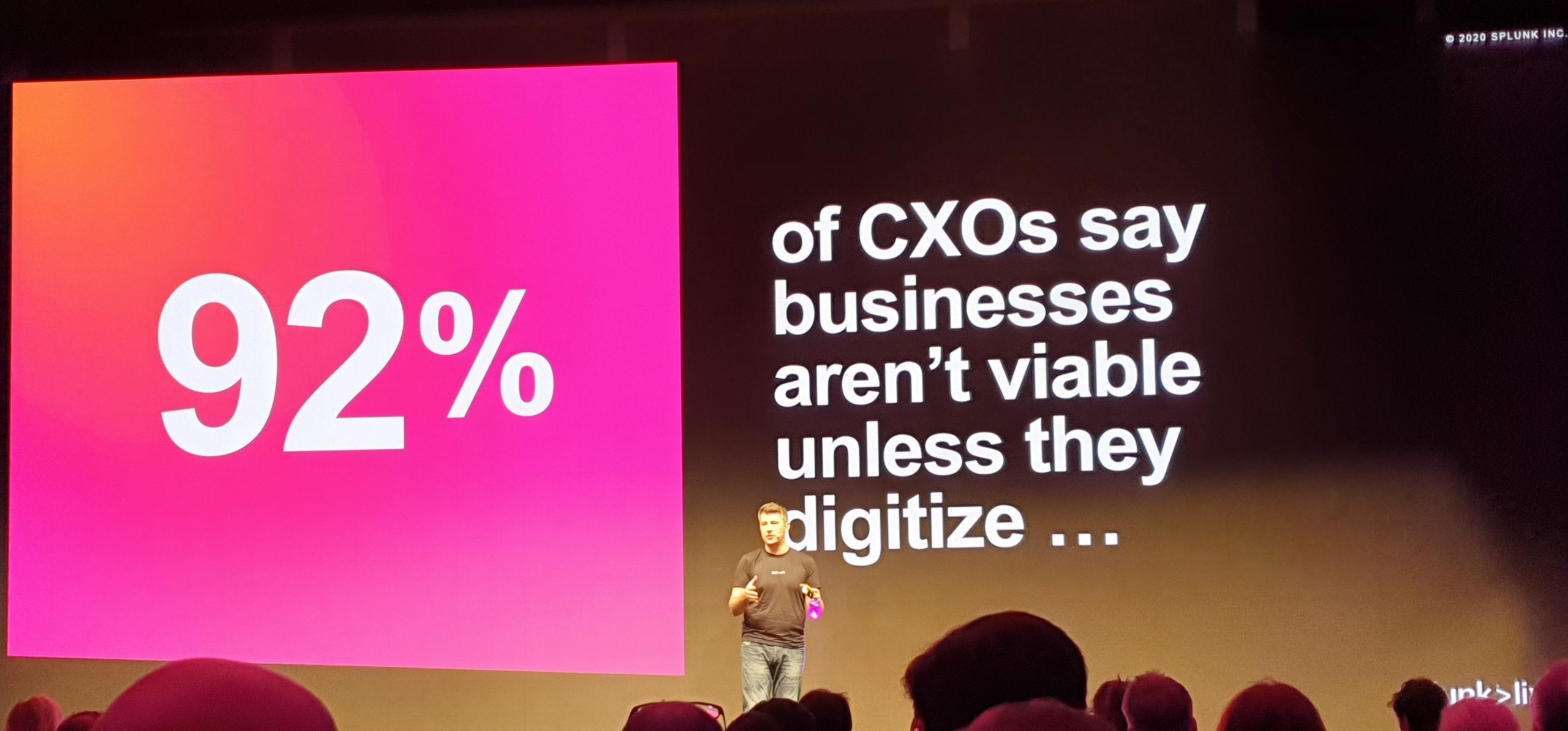

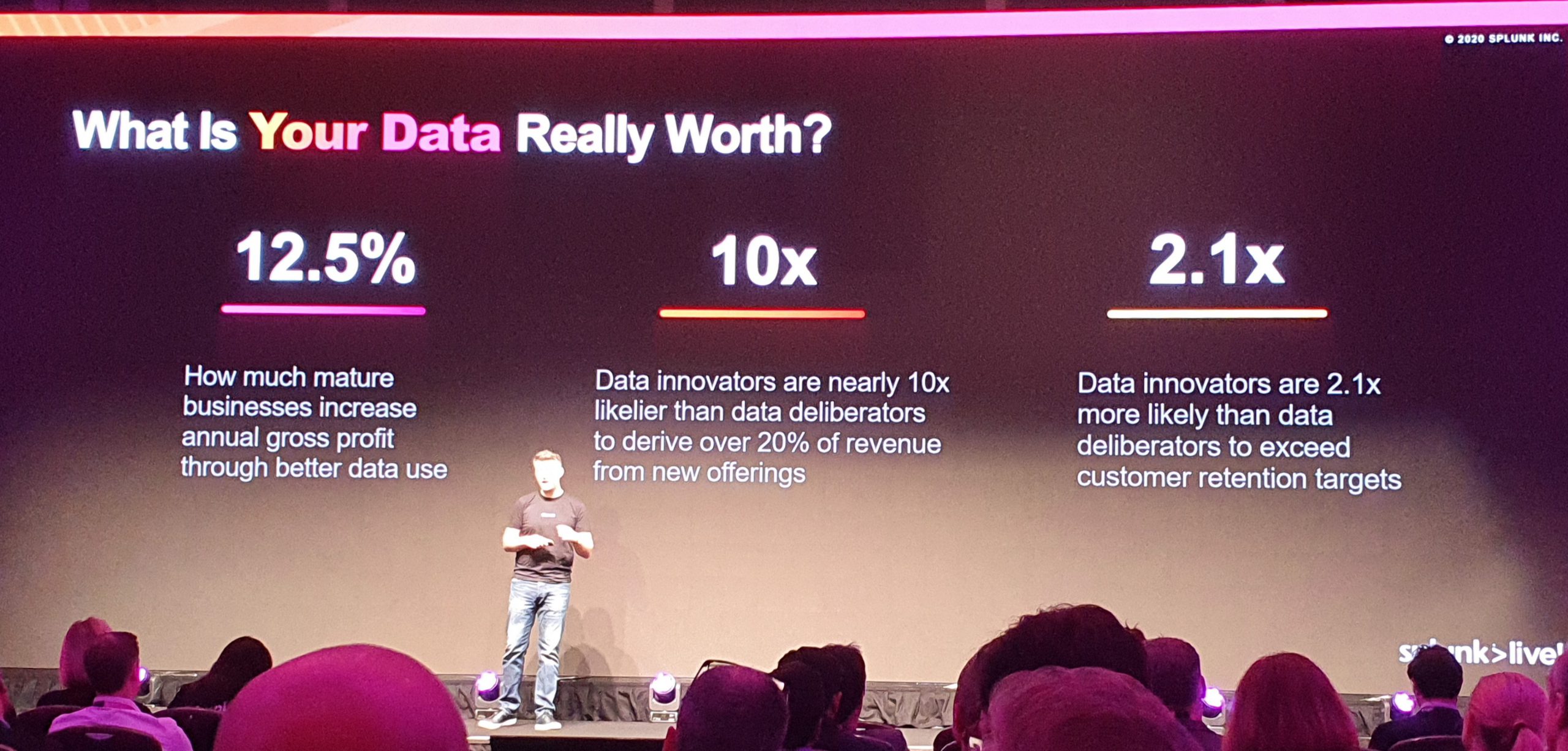

Early focuses were on how the world has entered the data age, the fourth revolution, and how the world can utilise data to improve people’s lives. It moved swiftly on to how data empowers people to make quicker and better decisions, and utilised some interesting statistics. This was quite close to home, as my employer Stagecoach are empowering our Technology teams not only to improve efficiency across the transport industry but also to provide a simpler format for our customers.

The transportation sector isn’t unique in being in the early stages of a digital transformation. But in a world where technology means that people don’t meet face-to-face as much, encouraging human interaction is a real challenge, as technologies such as WhatsApp and FaceTime are more commonplace than grabbing a cup of tea (or coffee) with a friend.

Following on from this was an interesting talk from Lloyds Banking Group on how they’ve used Splunk to drive their modernisation from a bricks and mortar bank to a digital bank. They embed Splunk in all levels of their regular code pushes, and it provides their infrastructure and security monitoring too. Certainly an interesting insight into modern ways of using a SIEM such as Splunk.

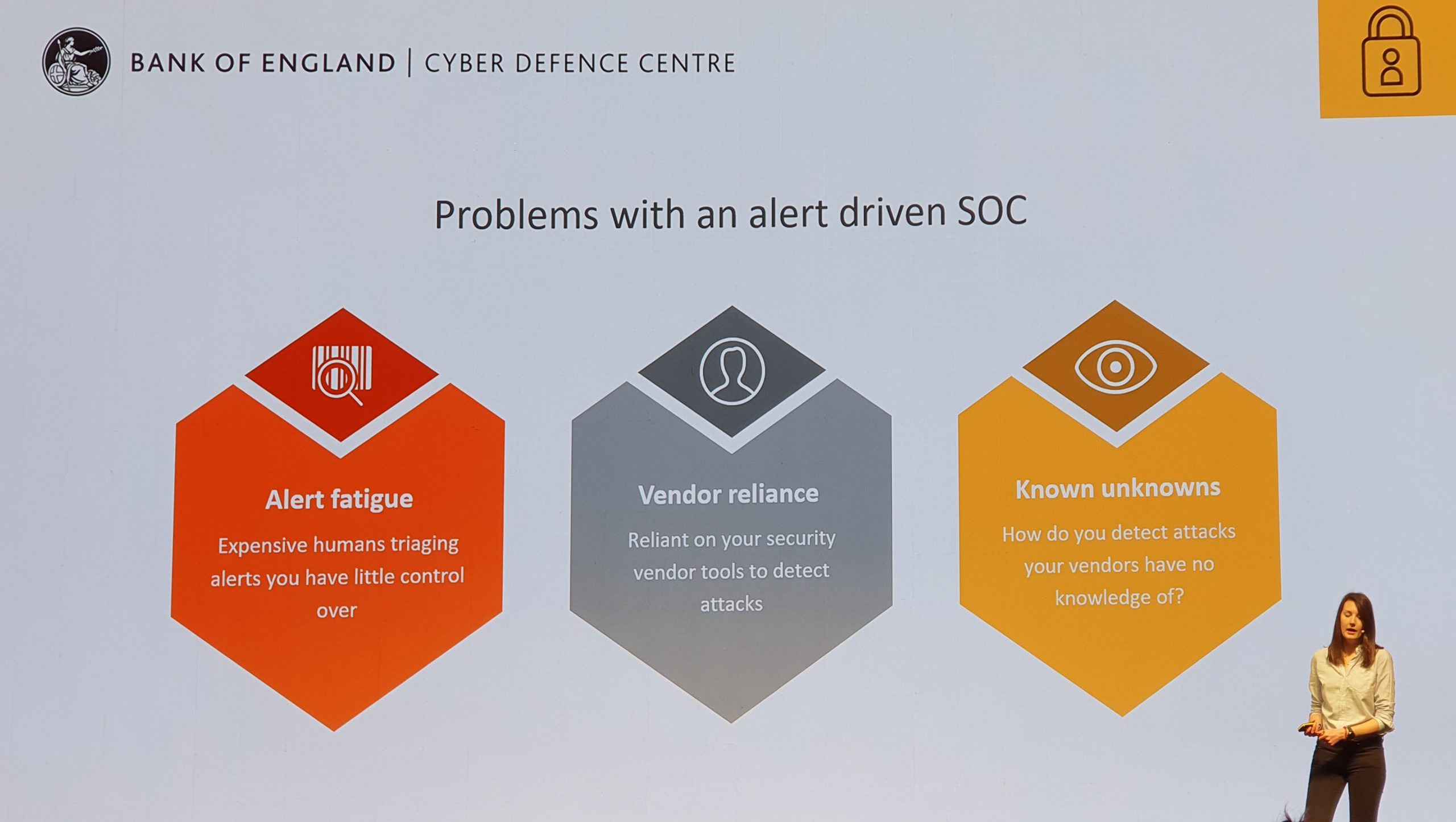

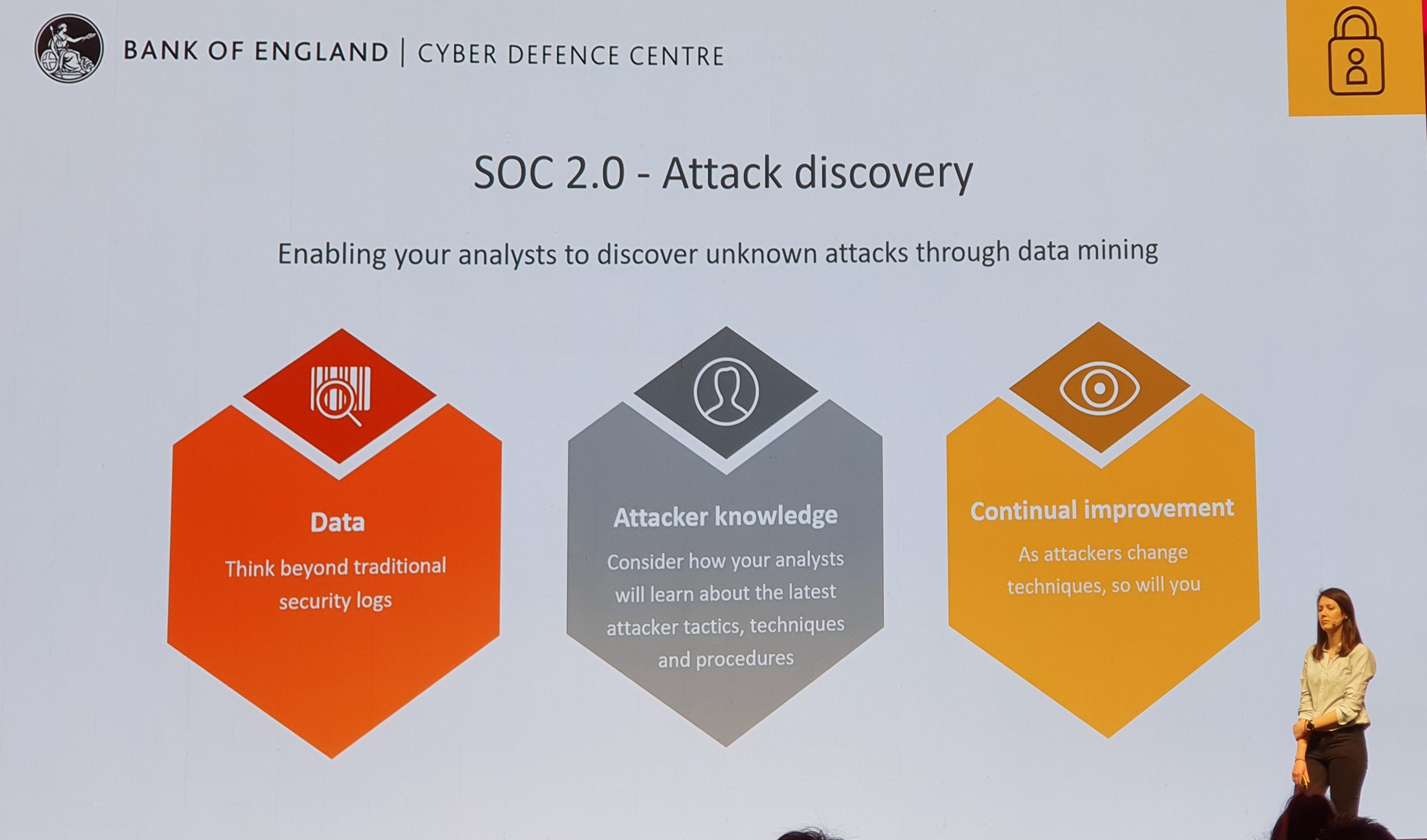

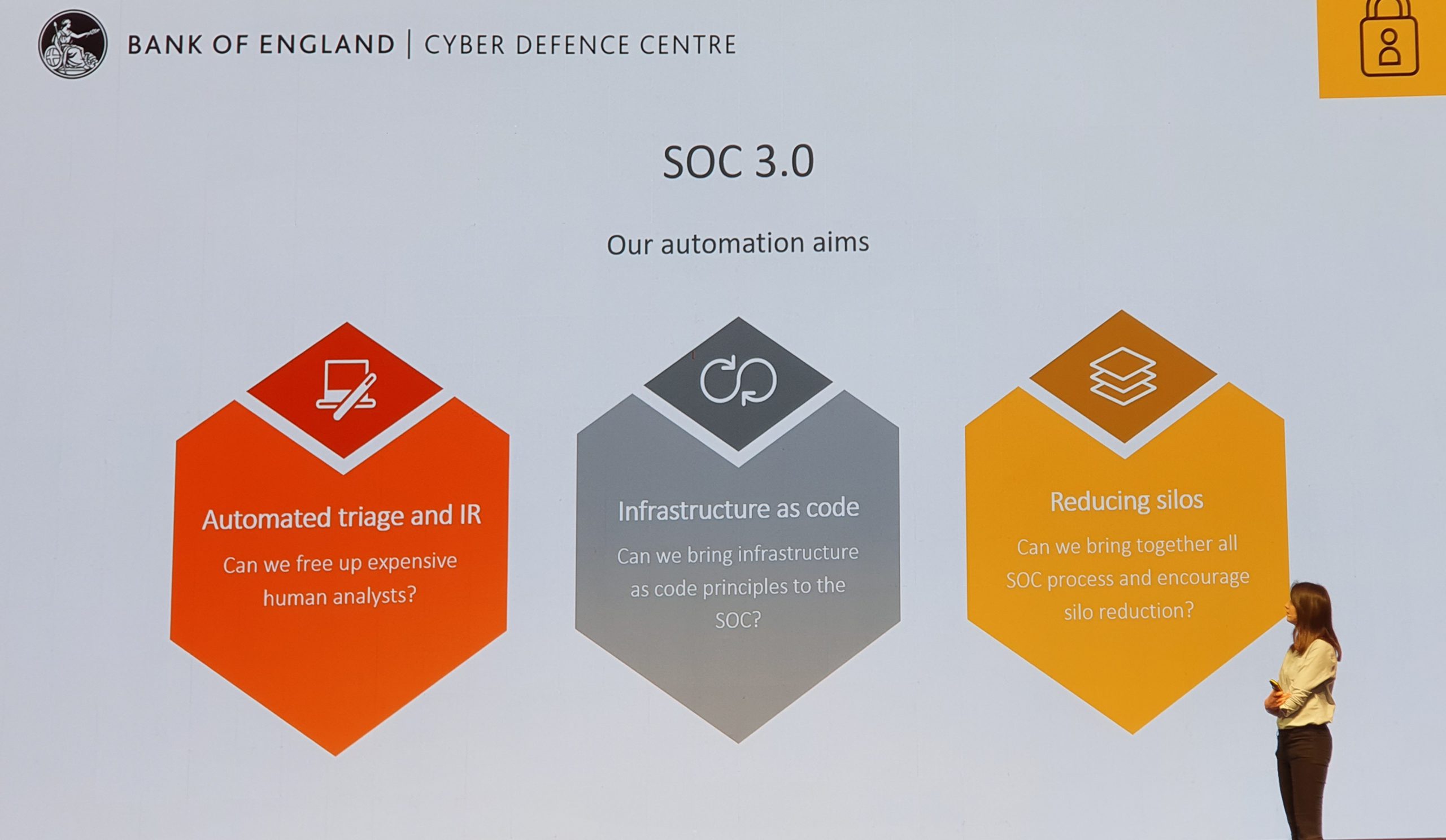

The highlight of the sessions was the Bank of England and how their cyber defence team of just 12 people uses Splunk in their SOC. They brought Jonathan Pagett and Carly West, who did a superb job of explaining how their SOC has developed from manually responding to alerts in a mailbox right the way through to fully automated responses. It was a fantastic insight into how an establishment such as the Bank of England has gone from just responding to the alerts their tools raised to continually writing their own detection mechanisms in response to ever-evolving attacks.

They used the concepts of 1.0, 2.0, etc. to mark step changes in the way they use their toolsets to detect threats and protect their environments. It was certainly a great insight into how adopting the correct tool, recruiting staff with the correct mindset, and having a clear roadmap can achieve a huge amount with such a small team.

Last up was Airbus, who are putting over 20 TB of data a day through their platform, which is used not just as a security tool but as a business tool. They have over 1,000 users across their business, using Splunk to monitor over 200,000 assets and provide insights and automation using the Splunk Phantom platform.

The afternoon for me kicked off with a security focused session, all about aligning outputs with the MITRE framework.

It included a really good demo of the Splunk Security Essentials app, covering how to get started with Splunk and, in particular, how to detect issues from the data in Splunk. Pre-written queries allow you to alert almost immediately on events such as unusual lateral traffic, or service accounts authenticating on machines that aren’t ‘normal’. Not complex queries by any means, but valuable for people just getting to grips with the product.
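The logic behind the "service account on an unusual machine" style of detection is simple enough to sketch in a few lines of Python. This is my own illustration of the idea, not the query from the app, and the account and host names are invented: build a baseline of which hosts each account normally logs on to, then flag anything outside it.

```python
from collections import defaultdict


def build_baseline(events):
    """Map each account to the set of hosts it has been seen on historically."""
    baseline = defaultdict(set)
    for account, host in events:
        baseline[account].add(host)
    return baseline


def unusual_logins(baseline, new_events):
    """Return logins where the account has never been seen on that host.

    Accounts with no history at all are flagged too.
    """
    return [
        (account, host)
        for account, host in new_events
        if host not in baseline.get(account, set())
    ]


# Invented (account, host) pairs, for illustration only.
history = [
    ("svc_backup", "backup-01"),
    ("svc_backup", "backup-02"),
    ("svc_web", "web-01"),
]
today = [
    ("svc_backup", "backup-01"),
    ("svc_backup", "hr-laptop-17"),
    ("svc_web", "web-01"),
]

alerts = unusual_logins(build_baseline(history), today)
print(alerts)
```

The hard part in real life is deciding how much history counts as ‘normal’, which is where having the data already indexed pays off.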

The last session of the day was guidance on how to create glass tables and valuable visuals from your data. I’m a sucker for a good looking web app, especially if it’s got dark mode (and Splunk does!). There were some good examples of how you can pull data in from various indexes and sources and display it all on a (here goes a marketing term) single pane of glass. In all fairness, it’s very slick, and assuming you know your search terms and you know what you want, it’s a very powerful tool.

And that pretty much rounds up the two day event in London. A very enjoyable and informative event, and with the imminent threat of coronavirus, probably the last for a good while. Thanks again to everyone at Splunk for a great event. I’m sure I’ll be there again next year.
